General Discussion

WarGamer

(18,355 posts)AI "reads" 3 million articles about auto repair... tells someone how to change their oil.

30 years ago, Bob at the Oil Pit had to see hundreds of different makes and models in the shop to memorize where the pan drain was...

I don't get all the anti-AI paranoia

True Dough

(26,193 posts)so there's good with the bad.

I believe the "paranoia" creeps in because tech companies are shelling out hundreds of billions in 2025 alone, and that spending is expected to generate a lot more money for the one-percenters who own those companies, while the average Joe and Jill find their jobs in jeopardy due to AI taking over.

Then what?

WarGamer

(18,355 posts)There needs to be an "AI dividend" paid to all adults... or at least those in lower income strata. (UBI)

AI has a greater potential for good than anything in the history of the planet.

The logical endpoint is an end to the scarcity of goods.

When AI is running the combines, running the farm... corn yields go up XX%

But that means a functioning government to make sure the benefit hits the average person.

But we have a self-serving, corrupt government at the helm. By all rights, they should be tossed to the curb during mid-terms and in 2028, but A) a lot of damage could be done in the meantime and B) hopefully they don't find ways to rig elections (if they haven't already).

highplainsdem

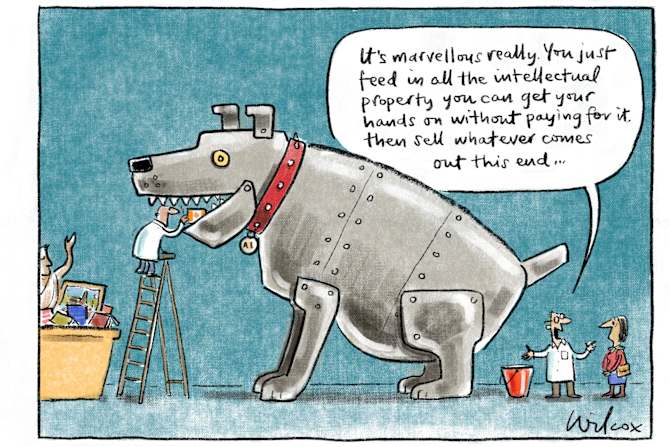

(61,057 posts)wealth, and just the IP theft makes it clear they're robber barons. And they're con artists, creating a dangerous bubble with hype and circular financing. They're every bit as bad as crypto bros, and a lot of them are crypto bros.

No, AI does not have a great potential for good. It has much more potential for harm, especially the genAI we have now.

highplainsdem

(61,057 posts)The AI bros didn't need to steal the world's intellectual property to do medical research.

They stole all that IP to sell fraudsters a better tool for fraud, and gullible people the illusion that they can at least pretend to be more knowledgeable, talented and creative than they are, without any effort. But take away the AI tool built on stolen work, and the charade falls apart. And the clueless AI users typically can't catch mistakes made by hallucinating AI, either.

snot

(11,598 posts)Among other things,

AI doesn't distinguish between true and false; LLMs in particular are just statistical number crunchers that string words together based on how frequently they appear together within the AIs' very limited data sets, tweaked to tell you whatever tends to maximize your engagement with the AIs.

AIs' data sets are stolen, and any particular guidelines programmed into them are devised in secret by people whose judgment and intentions I distrust (apparently the same geniuses who thought computers would never need to cope with years numbered past 1999).

AI can easily be used to manipulate you – it's already VERY good at that.

Most of the funding for AI development is coming from investors whose motivations I don't share – to achieve world domination, build scarier weapons, more effective propaganda, more addictive content, etc. And as we all know, those who write the checks get to prescribe the work product.

I personally have experimented with AI from time to time, and it has yet to give a correct answer on anything that's not totally elementary and formulaic; but instead of acknowledging its own limits, it concocts plausible-sounding, incorrect answers. Again, it doesn't know or care about the distinction between true and false (except based on some specific guideline programmed into it, which I may or may not agree with, only I can't be sure because its exact formulation is -- again -- secret).

There's also the concern re job elimination, of course – which i.m.h.o. hurts not only those whose jobs are lost but also the customers they served. AIs I've had to deal with in seeking customer service, e.g., have merely wasted my time, serving as another maze to get through before reaching a human who can answer anything beyond the most routine question. Plus, just as importantly for me, I miss the human contact.

More and more, our interactions are forced into check-the-box answers that rarely fit the facts perfectly, our experiences into bland predictability; because the world according to AI is infinitely smaller than the gigantic, unimaginably complicated and diverse reality of which AI is only a teeny, tiny part – indeed, any AI is infinitely smaller and more primitive than the mind of any human being who's not a total vegetable.

Obviously, AIs will "improve" in at least some ways. But the situation reminds me of the births first of TV and then of the internet. In both cases, the potential good these technologies offered was mind-boggling, and initially, a lot of those benefits were realized. But TPTB quickly co-opted them and bent them to their own purposes. We still enjoy a lot of benefits from these mediums, but nothing like what could have been; and they're often used to facilitate propaganda, exploitation, etc.

I.m.h.o., regulation and much more transparency w.r.t. AI development and uses are imperative.

highplainsdem

(61,057 posts)tech bros who want to charge them much more for the hallucinating chatbots.

It's devolution.

WarGamer

(18,355 posts)When used properly it's like education on steroids.

It depends on what one uses AI for.

Pick a historical topic... use a high end AI model and ask about... the Battle of Hastings.

Here's an example:

The Battle of Hastings, fought on October 14, 1066, is arguably the most consequential event in the history of the British Isles. It didn't just change a king; it fundamentally rewired the DNA of the English nation and shifted the geopolitical gravity of Western Europe.

The Battle: A Summary

The conflict arose from a succession crisis following the death of King Edward the Confessor, who left no heir. William, Duke of Normandy, claimed the throne was promised to him, while the English Witan (council) elected Harold Godwinson.

Harold had just defeated a Viking invasion in the north (the Battle of Stamford Bridge) and had to rush his exhausted army 250 miles south to meet William. The battle lasted an entire day—unusually long for the medieval period.

The Tactics: The English held a formidable "shield wall" on Senlac Hill. The Normans, utilizing superior cavalry and archers, eventually broke the wall using "feigned retreats" to lure the English down from their defensive high ground.

But that's not impressive... it's basically a fancy Google Search.

But Google CAN NOT do this

User: But Gemini... what role did William's wife and her family have?

William’s wife, Matilda of Flanders, was far more than a silent consort; she was a strategic powerhouse who provided the legitimacy, financial backing, and administrative stability William needed to succeed.

Matilda’s Family and Bloodline

Matilda was "high-born" in a way William (often called "the Bastard") was not. Her family tree provided the prestige the Norman dynasty lacked:

Royal Pedigree: She was the daughter of Count Baldwin V of Flanders and Adela of France (the daughter of King Robert II of France).

English Connection: Crucially for the invasion, she was a direct descendant of Alfred the Great. This allowed William to frame the conquest not just as a seizure of power, but as a restoration of a legitimate bloodline.

Strategic Alliance: Her father, the Count of Flanders, was one of the most powerful men in Europe. By marrying Matilda, William secured his northern border, allowing him to focus his military resources entirely on England without fearing a Flemish invasion.

Her Direct Role in the Conquest

Matilda was an active participant in the planning and execution of 1066:

The Flagship (The Mora): Matilda personally funded and commissioned William’s flagship, The Mora. It was the largest and mos(snip)

highplainsdem

(61,057 posts)all of it, in which case you haven't saved time. You've just been spoonfed a pablum version of history that could be riddled with errors.

And that tool works only because of intellectual property theft, and it uses much more electricity than other types of search, and it harms the websites it steals from. It directs wealth to the AI bros who are already billionaires happy to support Trump.

There's nothing positive about it.

WarGamer

(18,355 posts)Do you accuse a schoolteacher of AI theft when they download an article and read it?

Why not?

highplainsdem

(61,057 posts)it's theft. There's been plenty of evidence of that, from internal documents at different companies to a former Google CEO advising that AI startups should just steal IP and bet that they won't get sued unless their company is successful, and if they are successful they can afford enough lawyers to fight the lawsuits. I posted an LBN thread about that CEO. I've been explaining this to people here for years.

And I realize most people who like AI would prefer avoiding the fact that these are unethical, illegally trained tools based on theft. I've had to point out to AI-using academics on X - academics worrying that AI tools they like to use will steal their work, and their students' work, for training - that they're being both foolish because they can't trust the AI companies not to steal it, and hypocritical because the only reason those tools work at all is because so much intellectual property was stolen before the tools were released. The value of those AI tools is almost 100% in the stolen IP, which the AI bros have no intention of paying for. OpenAI has admitted in court documents that they have no hope of ever making a profit if they have to pay for what they stole.

https://www.tomshardware.com/news/ai-doesnt-learn-like-people-do

WarGamer

(18,355 posts)How can anything public... be stolen?

highplainsdem

(61,057 posts)covered by copyright. It doesn't require registering a copyright.

And the "fair use" argument AI companies try to make is fallacious, too, because their tools are intended to compete with the creatives whose work was stolen.

snot

(11,598 posts)I believe that if the use is purely for educational, non-commercial purposes, those factors weigh strongly against liability under copyright law.

AI's are being developed for purposes of money and power.

WhiskeyGrinder

(26,746 posts)

canetoad

(20,484 posts)"When used properly", but there's a helluva lot of latitude in the definition of 'properly'. Medical and scientific uses are, IMO, legitimate; using it to influence people online is not.

WarGamer

(18,355 posts)

WhiskeyGrinder

(26,746 posts)

EdmondDantes_

(1,574 posts)There's also emerging evidence that relying on AI does make us not retain what we "learned" from it, presumably because we didn't have to think or engage with the work.

There are also some people who believe strongly that AI will bring about the end of humanity, and even if it doesn't, it has the potential to destroy the economy.

And Bob at the Oil Pit paid for the books he read to learn. These guys are just stealing everything so it undercuts everyone else's work output. Sure someone putting up how to change your car's oil might put Bob out of business, but the scope of that is smaller. My company is preparing to slash our QA department because AI in theory will do much of the work.

usonian

(24,286 posts)

anciano

(2,227 posts)for obtaining information, evaluating ideas, and enhancing creativity.

rog

(932 posts)... the hospital financial dept tried gaslighting me --- went so far as to accuse me of requesting them to do 'fraudulent billing' when I asked them to correct the mistake.

In frustration, I submitted a very brief summary of the situation to ChatGPT (and I admit I have a love/hate relationship with AI at this point, but I had already put days into this and was at a loss). In 30 seconds I had a plan of action, who to contact and in what order, what to expect from their response, templates for formal letters of dispute and scripts for phone calls, a summary of the laws regarding my situation, how to escalate up the chain if I was denied, etc.

When I called back to the billing dept to initiate the claim it was immediately routed to one of the customer relations supervisors who called back immediately, complimented me on my knowledge of the system, told me to relay all this info to any friends who were on Medicare, etc. The matter was resolved in my favor in one day, without even having to submit a formal letter of dispute. Everyone (except the original collection agent, who only wanted to know when I was going to pay) was very nice and helpful, and I ended up learning a lot about how coding and billing works at a huge hospital. I also have the private number of the person who helped me resolve this, in case I have another problem.

I think AI is one of the main reasons Google searches, as well as searches on other engines, are becoming more and more worthless. It helps to use search operators - like site: - but still, it sometimes takes hours to cut through the slop. I find it ironic that AI can actually help cut through that slop if you specify targeted searches, ask for links to primary sources, ask for professionally or academically verified results, etc.

I am getting more comfortable using AI like I use my computer; to help me organize, do a task, etc. That doesn't make it any less annoying, though.

Edited to add that I realize I could have paid a professional consultant hundreds of dollars for the expertise that AI 'stole'.

fujiyamasan

(1,454 posts)I don’t use it for AI “slop art” or whatever, but it saves me time on Google searches and going through wiki rabbit holes when I’m curious about something. What I like is that after reading something, either through the LLM or elsewhere, I can use it to ask further questions.

If I then want to dig in depth I’ll look into primary sources.

Would I use it for some kind of safety critical application or something very complex? No, and I don’t think that’s what LLMs are intended for anyways.

BootinUp

(51,060 posts)

highplainsdem

(61,057 posts)

Doodley

(11,797 posts)It can quickly provide illustrations or graphs to support articles. When it comes to fiction, I'm an instant expert on any location my characters find themselves in and any interest that they pursue. I have even used it to give me options for names, favorite foods, and dialect for characters from far flung lands. It gives me the opportunity to focus on what I enjoy most with fiction---creating vivid characters and situations, rather than getting bogged down with research.